FERG

We investigate how people perceive facial expressions and how that perception is affected by the movements of the face as a whole and of its individual parts. We have developed a perceptual model of facial expressions that predicts how viewers will respond to a given expression. Using that model, we have built a plugin for a 3D animation program that gives interactive, real-time feedback and suggestions for improving the clarity of facial expressions.

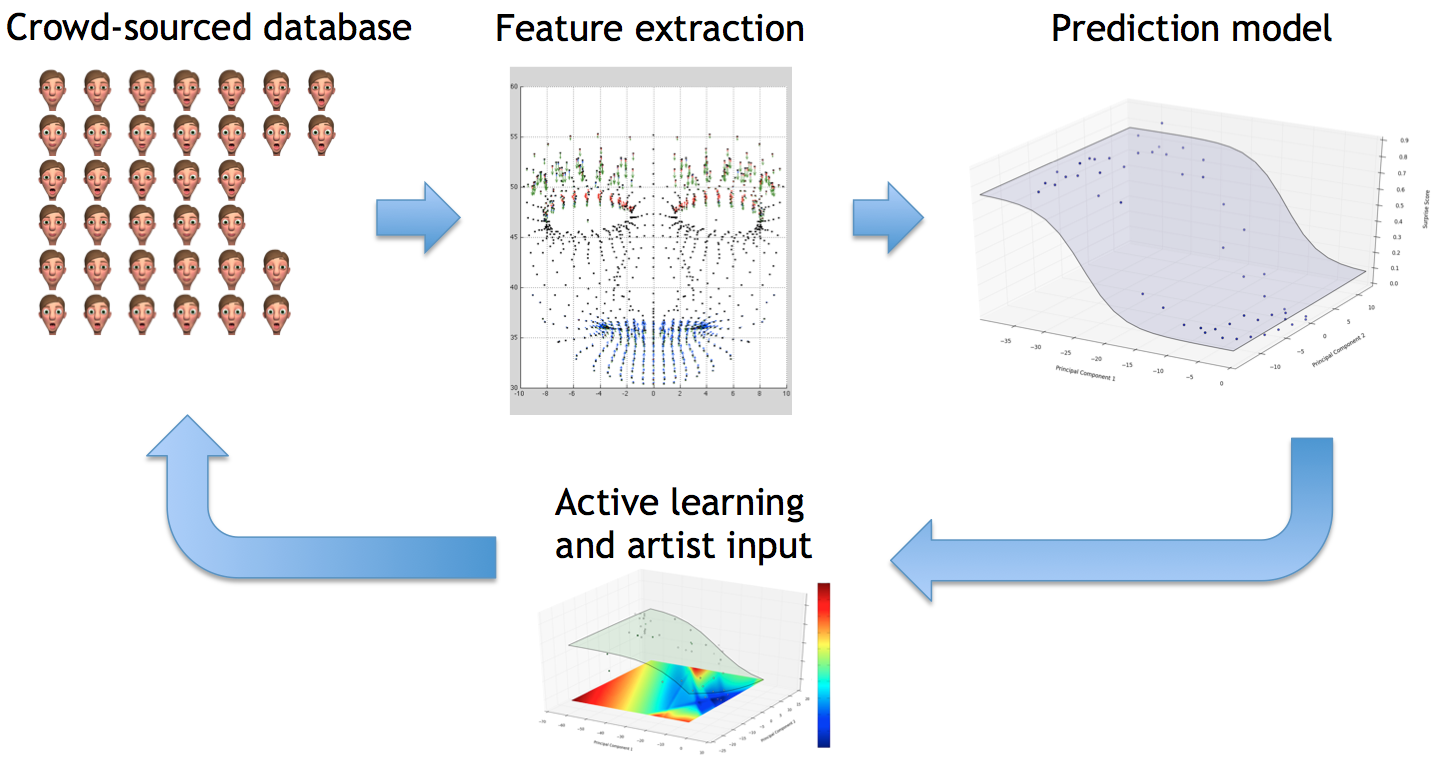

We use crowd-sourcing to build a database of perceptually evaluated facial expressions on a stylized character. Viewers are shown an expression and asked either to choose which emotion it depicts or to rate its intensity relative to another expression.
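The two kinds of crowd responses described above can be summarized per expression before any model is trained. The following is a minimal sketch, not the project's actual pipeline: the record layouts (`(expression_id, chosen_emotion)` for forced-choice answers and `(winner_id, loser_id)` for intensity comparisons) and the function names are hypothetical. The win rate is a deliberately simple stand-in; a pairwise-comparison model such as Bradley-Terry would be a more principled way to turn comparisons into intensity scores.

```python
from collections import Counter, defaultdict


def label_distribution(choices):
    """Per expression, the fraction of viewers who chose each emotion.

    `choices` is an iterable of (expression_id, chosen_emotion) pairs
    (a hypothetical record layout used only for illustration).
    """
    counts = defaultdict(Counter)
    for expr_id, emotion in choices:
        counts[expr_id][emotion] += 1
    return {
        expr_id: {emotion: n / sum(c.values()) for emotion, n in c.items()}
        for expr_id, c in counts.items()
    }


def intensity_win_rate(comparisons):
    """Per expression, the fraction of pairwise comparisons it won.

    `comparisons` is an iterable of (winner_id, loser_id) pairs, where the
    winner was judged the more intense expression (hypothetical layout).
    """
    wins = Counter()
    totals = Counter()
    for winner, loser in comparisons:
        wins[winner] += 1
        totals[winner] += 1
        totals[loser] += 1
    # Counter returns 0 for expressions that never won.
    return {expr_id: wins[expr_id] / totals[expr_id] for expr_id in totals}


if __name__ == "__main__":
    choices = [("e1", "joy"), ("e1", "joy"), ("e1", "surprise"), ("e2", "anger")]
    comparisons = [("e1", "e2"), ("e1", "e2"), ("e2", "e1")]
    print(label_distribution(choices))
    print(intensity_win_rate(comparisons))
```

Here expression `e1` is recognized as joy by two of its three viewers and wins two of its three intensity comparisons against `e2`.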

Our perceptual model learns from this data to predict accurately how newly created expressions will be perceived. The model can also generate new expressions with desired properties, within the range covered by its knowledge base: for example, an expression that viewers will recognize as a specific emotion at a specific intensity level.